Monetizing AI at the Enterprise Edge

A Guide to Gaining Competitive Advantage Through Edge Computing

Executive Summary

In today’s rapidly evolving technological landscape, enterprise business leaders are constantly seeking new ways to leverage data for competitive advantage. While cloud-based AI has dominated headlines, a quiet revolution is taking place at the edge of networks where data is generated. Edge AI, the deployment of edge computing, especially for AI Inference capabilities, directly at the point of data creation, represents the next frontier in enterprise innovation.

This guide explores how large enterprises can monetize their proprietary data through edge computing infrastructure purposefully designed for AI. By bringing computation closer to data sources, organizations unlock real-time insights, enhance security, reduce latency, and create entirely new revenue streams that were previously impossible with centralized cloud architectures.

According to Gartner1 , by 2029, at least 60% of edge computing deployments will utilize composite AI (both predictive and generative AI), compared to less than 5% in 2023. This explosive growth represents both an opportunity and a challenge for enterprise leaders who must navigate the complexities of implementing Edge AI at scale.

This document will provide you with a comprehensive understanding of Edge AI inference, its differences from cloud-based AI training, the unique architectural requirements, financial benefits, industry use cases, implementation challenges, and a practical roadmap for successful deployment.

Why Edge Computing Has Become Critical for Modern AI

Traditional cloud-centric AI architectures face fundamental limitations when applied to many real-world business scenarios:

- Latency Constraints: Mission-critical applications like autonomous vehicles, industrial safety systems, predictive maintenance, computer vision, and real-time financial fraud detection cannot tolerate the delay introduced by sending data to distant cloud data centers for inference processing.

- Bandwidth Limitations: The sheer volume of data generated by modern sensors, cameras, and IoT devices makes transmitting all raw data to the cloud economically unfeasible and technically challenging.

- Connectivity Requirements: Many operational environments experience intermittent connectivity, are in secure air gapped environments, or operate in locations where reliable network access is unavailable.

- Data Privacy & Sovereignty: Increasingly stringent regulations around data privacy and sovereignty make processing sensitive data locally a necessity rather than a choice.

- Operational Costs: Transmitting and storing raw data in the cloud creates ongoing expenses that can quickly erode ROI for data-intensive applications.

Edge computing addresses these challenges by bringing AI inference, with the process of using trained AI models to make predictions or decisions, directly to where data originates.

This architectural shift fundamentally changes what’s possible with enterprise AI. As noted in Gartner’s recent research1 , “By 2029, 50% of enterprises will be using edge computing, up from 20% in 2024.” This dramatic shift underscores the growing recognition that edge processing is essential for the next generation of AI-powered business applications.

By deploying an edge computing platform to enable Edge AI Inference at the edge, enterprises can:

- Process data in milliseconds rather than seconds or minutes or days

- Operate effectively in bandwidth-constrained environments

- Function reliably with intermittent cloud connectivity

- Maintain greater control over sensitive data

- Dramatically reduce data transmission and storage costs

These capabilities unlock use cases that would be impractical or impossible with purely cloud-based approaches, creating new opportunities for enterprises to monetize their proprietary data assets

Edge Computing vs. Cloud for AI: Understanding the Differences

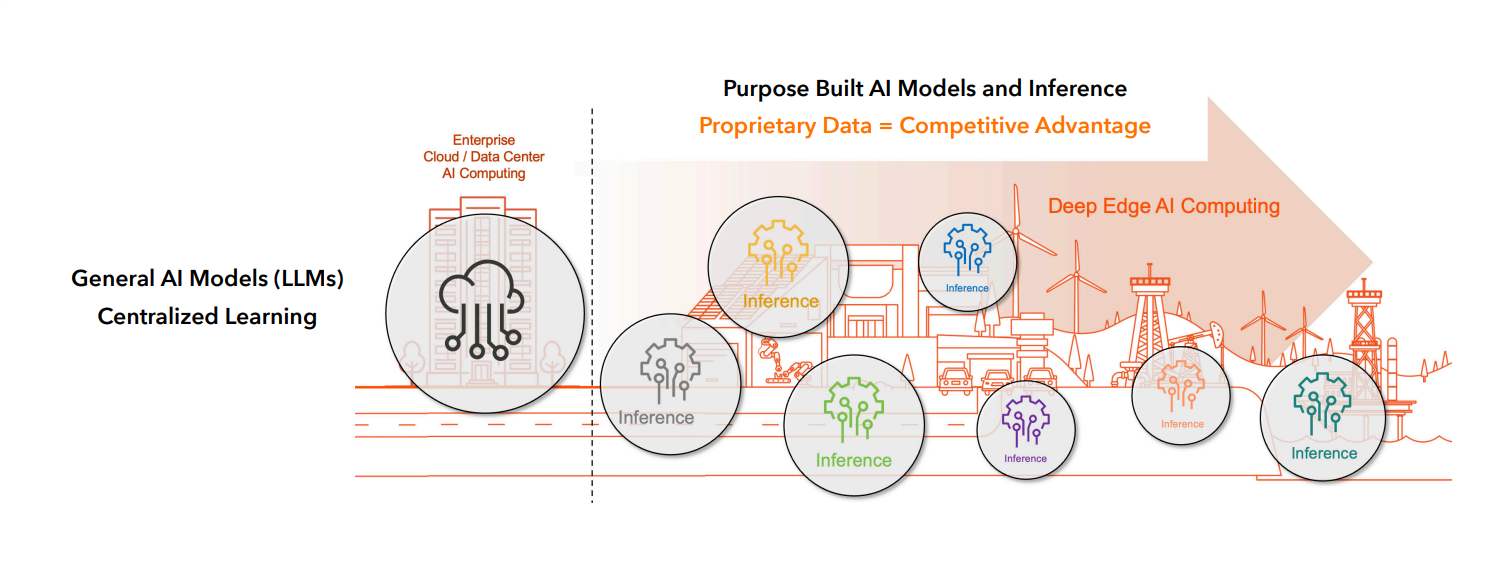

To fully appreciate the edge computing revolution in AI, it’s essential to understand the fundamental differences between AI training, typically performed in centralized data centers and AI inference, increasingly deployed at the edge.

AI Training (Centralized):

- Resource-Intensive: Requires massive computational resources (GPUs/TPUs) for extended periods

- Data Aggregation: Utilizes large datasets collected from many sources

- Batch Processing: Operates on historical data in non-time-critical manner

- Scalable Compute: Leverages elastic cloud resources to scale as needed

- High Power Consumption: Typically requires industrial cooling and power infrastructure

- Infrequent Process: Models are trained periodically, not continuously

AI Inference (Edge):

- Resource-Efficient: Optimized to run on constrained hardware with limited power

- Low Latency: Designed for near-instantaneous response times

- Stream Processing: Operates on real-time data streams

- Localized Computation: Functions within available local resources

- Energy Efficiency: Must operate within strict power envelopes

- Continuous Operation: Runs continuously in production environments

A Complementary Relationship

Edge AI and centralized AI are not competing approaches but complementary parts of a comprehensive AI strategy. Models are typically trained in centralized environments using accumulated data, then optimized and deployed to edge devices for inference. This hybrid approach combines the best of both worlds: the powerful learning capabilities of centralized systems with the real-time responsiveness of edge deployment.

Market Trends: The Edge AI Revolution

Key Insights:

- Deployments of Enterprise Edge Computing Infrastructure are growing rapidly.

- This growth is being driven by the desire to leverage local data for AI Inference.

- Edge AI solutions reduce downtime and deliver competitive advantage.

Gartner has been particularly bullish on the growth of edge AI, predicting that “by 2029, at least 60% of edge computing deployments will use composite AI (both predictive and generative AI), compared to less than 5% in 2023.” This represents a twelve-fold increase in just six years, underscoring the accelerating adoption of AI at the edge.

In their report “Market Guide for Edge Computing,” Gartner analysts note: “Edge computing is entering a new phase of enterprise adoption, driven by the need to support AI workloads outside traditional data centers. Organizations that fail to develop edge AI capabilities risk significant competitive disadvantage as real-time intelligence becomes table stakes in multiple industries.“

IDC’s research1 complements these findings, projecting that worldwide spending on edge computing will reach $274 billion by 2025, with AI-related edge deployments growing at a 19.6% CAGR—significantly faster than the overall edge market.

According to IDC’s Dave McCarthy, Research Vice President of Cloud and Edge Infrastructure Services: “The edge is becoming the center of gravity for data in many enterprises. As AI becomes embedded in business processes, the need to process this data locally—at the edge—becomes paramount for businesses seeking competitive advantage.“

Forrester2 has identified edge AI as one of the top emerging technologies for enterprise transformation. Their analysis indicates that “organizations deploying edge AI solutions report an average 35% improvement in operational efficiency and a 27% reduction in downtime across use cases.” Saying also that “edge computing platforms are evolving rapidly to support AI workloads, with specialized hardware and software stacks optimized for inference.”

Key Drivers of Edge AI Growth

The rapid acceleration of edge AI adoption is being driven by several converging factors:

- Maturation of AI Technology: Breakthroughs in model optimization and hardware acceleration have made complex AI workloads viable on edge devices.

- Proliferation of IoT Devices: The explosion of connected devices is generating unprecedented volumes of data that must be processed locally.

- 5G Deployment: The rollout of 5G networks is enabling more sophisticated edge computing architectures with improved connectivity and device orchestration.

- Regulatory Pressure: Increasing data sovereignty requirements are forcing organizations to process sensitive data locally rather than in centralized clouds.

- Real-Time Requirements: Growing business demands for instantaneous insights and actions that cannot tolerate cloud roundtrip latency.

These market trends make clear that edge AI is not merely a technological curiosity but a foundational capability that will reshape enterprise computing architectures over the next decade.

The message from industry analysts is clear — while cloud platforms will continue to play a crucial role in AI training and development, edge computing will become the dominant paradigm for AI inference in enterprise environments. The convergence of edge computing and artificial intelligence represents one of the most significant technological shifts in enterprise IT.

Hyperscaler Architecture vs. Edge Computing Architecture

Why Cloud Architectures Fall Short at the Edge

Hyperscalers like Amazon Web Services, Microsoft Azure, and Google Cloud have built impressive infrastructure optimized for centralized cloud computing. These architectures were fundamentally designed with different constraints and objectives than those required for effective edge computing. It’s important to note that there are many “Cloud Experience” characteristics that cannot be lost and must carry over from cloud data centers to edge computing infrastructure and operations, including multi-tenancy, resiliency to failure, security, automation, and API-driven operations. Some of the key differences, which drive the need for a new edge computing stack include:

Hyperscaler Architecture Characteristics:

- Centralized Resource Pools: Massive data centers with homogeneous hardware

- High-Bandwidth Connectivity: Assumes reliable, high-speed network connectivity

- Virtualization-Heavy: Multiple abstraction layers to enable multi-tenancy

- Elastic Resource Allocation: Dynamic scaling of resources based on demand

Managed Services Focus: Emphasis on fully managed platform capabilities - Unified Control Planes: Centralized management and orchestration

Edge Computing Requirements:

- Distributed Infrastructure: Heterogeneous hardware spread across diverse locations

- Connectivity-Optional: Must function with intermittent or bandwidth-constrained networks

- Lightweight Virtualization: Optimized for resource-constrained environments

- Fixed Resource Utilization: Often operates on dedicated hardware with set capabilities

- Autonomy-Focused: Must function independently without constant central control

- Federated Management: Distributed control with local decision-making capability

The “Edge Gap” in Cloud Platforms

While hyperscalers have introduced “edge” offerings that claim to deliver the “cloud experience” for edge computing, these solutions often extend existing “cloud architecture” to edge locations but have not fundamentally rethought the architecture for edge requirements. This creates what is frequently referred to as the “edge gap”. Edge gap signifies the disparity between the perceived value and potential of edge computing and the practical challenges and complexities hindering its widespread and effective deployment.

“Edge computing requires platforms that enable edge-native workloads, provide zero-touch management and integrate between the cloud and the edge. I&O leaders should choose edge computing platforms that are extensible for new and evolving workloads — including edge AI.” – GARTNER

The Need for Purpose-Built Edge Platforms

To effectively support AI inference at the edge, enterprises need computing platforms specifically designed for edge requirements:

- Hardware Diversity Support: The ability to manage a heterogeneous fleet of computing devices, from industrial gateways to rack servers

- Zero-Trust Security: Security architectures that assume hostile physical environments and untrusted networks

- Autonomous Operation: Capability to function independently during network outages or bandwidth constraints

- Lightweight Resource Footprint: Minimal overhead to maximize resources available for AI workloads

- Field Serviceability: Designed for environments without IT staff, through remote management capabilities

- Edge-Native Orchestration: Workload management optimized for distributed deployment and limited resources

These architectural requirements highlight why purpose-built edge computing platforms are essential for enterprises seeking to deploy AI inference at scale across distributed environments.

Financial Benefits and ROI of Edge AI

The Business Case for Edge AI Investment

Deploying AI inference capabilities at the edge represents not just a technological advancement but a strategic business investment with quantifiable financial returns. Analysis from multiple industries reveals several key financial benefits:

1Operational Cost Reduction

By processing data at the edge, enterprises can dramatically reduce cloud computing and data transmission costs:

- Bandwidth Savings: A medium-sized manufacturing facility generating 1TB of sensor data daily could save $180,000-$300,000 annually in data egress and storage costs1 by filtering and processing that data locally, transmitting only actionable insights.

- Cloud Compute Reduction: Organizations adopting edge AI can anticipate a decrease in cloud computing expenses for applicable workloads. This reduction will vary based on the quantity of applications and AI inferences transitioned from the cloud to edge computing.

- Infrastructure Optimization: Edge AI-powered predictive maintenance can decrease infrastructure downtime by an estimated 25-45% through early identification of potential failures and automated responses

2Revenue Enhancement

Edge AI creates opportunities for new or enhanced revenue streams:

- Service Monetization: Equipment manufacturers implementing edge AI for predictive maintenance can convert traditional product sales into service-based revenue, significantly increasing customer lifetime revenue opportunity.

- Data Product Creation: Retailers using edge AI for in-store analytics can create anonymized shopping behavior data products, generating incremental annual revenue per square foot.

- Premium Offerings: Leveraging specialized, proprietary data at the edge, allows businesses to create new services that deliver customer value, competitive advantage and new revenue.

3Speed to Insights

Edge reduces latency and AI inference accelerates time to understanding critical information local data provides:

- Faster Feedback: Edge AI solutions reduce latency between data creation and critical decision making.

- Realtime Response Times: Edge AI Inference provides much faster response times, allowing early detection of important quality issues before they disrupt product delivery

4Risk Mitigation

Edge AI provides financial benefits through risk reduction:

- Regulatory Compliance: Processing sensitive data locally can reduce corporate and regulatory compliance-related costs, especially in highly regulated industries.

- Operational Continuity: AI systems that function during network outages deliver business continuity benefits valued at $45,000-$120,000 per hour of prevented downtime in critical operations.

ROI Calculation Framework

Based on analysis across multiple industries, enterprises can expect the following ROI timeline for edge AI deployments:

- Short-term ROI (0-12 months): Primarily driven by operational cost reductions, especially in bandwidth and cloud computing expenses.

- Medium-term ROI (12-24 months): Realized through operational improvements and initial monetization of enhanced capabilities.

- Long-term ROI (24+ months): Derived from strategic competitive advantage, new business models, and ecosystem position.

Taking advantage of the savings and optimizations described above, organizations deploying edge AI should expect to achieve initial breakeven on their investments within 2 years, with subsequent savings to fund further expansion.

Industry Use Cases

Transforming Industries Through Edge AI

Edge AI is revolutionizing operations across multiple verticals, creating competitive advantages for early adopters. The following industry examples demonstrate how enterprises are monetizing their proprietary data through edge computing for AI inference:

Automotive

Advanced Driver Assistance Systems (ADAS) and Autonomous Vehicles

Tesla’s implementation of edge computing for their Autopilot system demonstrates the power of edge AI in automotive applications. By processing camera and sensor data directly in vehicles using custom hardware, Tesla vehicles can make driving decisions in milliseconds rather than seconds, a critical difference for safety-critical applications. This edge-first approach has allowed Tesla to build a proprietary data advantage, with their fleet collecting over 35 million miles of driving data daily, processed at the edge and selectively uploaded to improve central models.

Manufacturing Quality Control

A leading automotive manufacturer has deployed an edge AI solution across its manufacturing plants that uses computer vision to detect quality issues in real-time during vehicle assembly. This system operates on a distributed edge computing platform that processes over 10TB of image data daily without sending it to the cloud.

Financial Impact: By accurately predicting machine failures and scheduling proactive maintenance, the manufacturer anticipates annual cost savings of $2 million and a 20% increase in production efficiency. With an initial investment of $500,000 in AI infrastructure and personnel, the project is expected to yield an ROI of 300% within the first year.

Oil and Gas

Predictive Maintenance for Critical Equipment

Shell has pioneered edge AI deployment across its upstream operations, installing edge computing systems on offshore platforms to analyze vibration, pressure, and temperature data from critical equipment. These systems can detect potential failures days before they would occur, allowing for planned maintenance rather than emergency repairs. The solution processes over 10 million sensor readings daily at the edge, with only summarized insights transmitted to central operations.

Real-time Drilling Optimization

Baker Hughes deployed edge AI systems at drilling sites to optimize drilling parameters in real-time, analyzing downhole telemetry data to adjust operations for changing geological conditions. The system processes data with sub-second latency, a requirement impossible to meet with cloud-based solutions given remote drilling locations.

Financial Impact: Shell reported a 20% decrease in maintenance costs, saving an estimated $2 billion annually and a 35% reduction in unplanned downtime, which translates to a 5% boost in operational uptime and a 25% improvement in maintenance staff efficiency

Retail

Customer Experience Creates Competitive Advantage

Customer engagement and user experience have become more important for retail profitability than product and price. To gain competitive advantage, retailers are blending customer experience across in person, online and mobile interactions optimizing customer experiences to individual preferences. This real time synchronization requires an ability to access and process massive amounts of data at the retail edge and delivers powerful new capabilities – smart shelves, smart checkout, interactive displays, AI/ML computer vision, and real time video analytics – without the expense and latency of sending the data to the cloud.

Computer Vision for Retail Store Operation and Security

By 2033, visual product recognition systems at the retail edge will account for one-third of store product identification, stock, audit and check-out transactions, compared with less than 1% in 2024. Computer vision offers real time insights for well informed, virtually immediate operational decisions. Security is one of the most critical areas where edge computing and AI is delivering real time insights. Cameras with edge computing-based AI applications deliver edge processing which can detect suspicious activities, like shoplifting or product tampering, as it happens, a major benefit over traditional surveillance systems, which must rely on delayed, cloud-based processing.

Financial Impact: Retailers who implement visual recognition and Edge AI technologies will reduce costs through automation, drive new revenue through an improved customer experience and by avoiding stock-outs, all while optimizing security that reduces shrinkage and maximizes profitability.

Quality Assurance Through Computer Vision

A leading computer chip manufacturer deployed edge AI systems across semiconductor manufacturing lines for defect detection, with computer vision models running directly on factory floors. The system processes over 3TB of image data daily without cloud transmission, identifying defects with almost perfect accuracy to significantly reduce quality escapes. The edge-based architecture allowed deployment in facilities with strict IP protection requirements where cloud connections would be prohibited.

Predictive Maintenance at Scale

A global manufacturer implemented an edge AI platform across its manufacturing facilities to enable predictive maintenance for production equipment. The system analyzes vibration, acoustic, and thermal data from thousands of machines, processing over 5TB of data daily at the edge. The solution has reduced unplanned downtime and has significantly extended the operational life of manufacturing equipment.

Financial Impact: Manufacturers implementing edge AI for quality control and predictive maintenance realize average cost savings of $240,000-$360,000 per production line annually.

CIO Survey Provides Key Insights

ZEDEDA partnered with Censuswide between February 26 and March 4, 2025 to conduct a survey of 301 US-based CIOs, specifically exploring enterprise investments in Edge AI. Key findings and link to complete survey results below:

Customer Experience and Predictive Maintenance are Largest Initial Investment Areas

The primary focus of initial edge AI investments is on enhancing customer experience, risk management, cost reduction, and process acceleration. As expected, retail has been keenly focused on customer experience: 93% of retail CIOs state that they have deployed some type of edge AI for this purpose, compared to 80% across all sectors. However, future investment priorities are shifting towards cost reduction and risk management, process acceleration, and customer experience. Looking ahead, cost reduction (74%) and risk management (73%) are the leading priorities for future edge AI deployments in the next 12-24 months. Notably, manufacturing is prioritizing process acceleration. 82% of manufacturing companies in this sector are planning to deploy edge AI for this purpose in the next 12-24 months, compared to 68% across all sectors.

Security and Privacy are Both Key Drivers and Top Challenges for Edge AI

Security and privacy play a dual role in edge AI. CIOs cite improving security and data privacy (53%) as the top reason for investing in edge AI over cloud-based AI. Yet, security risks and data protection concerns (42%) are also identified as the leading challenges in edge AI deployments.

Multimodal AI Emerges as the Preferred Edge AI Model

Multimodal AI, which combines speech, text, and vision, is the most popular AI model currently running or planned for deployment at the edge. 60% of CIOs surveyed are running/planning to run multimodal AI at the edge, similar to those running it in the cloud (59%). After multimodal Al models, speech recognition models (52%) were the next most popular. Notably, large language models (LLMs) ranked as popular as computer vision models for the edge, at 47% of currently or planned deployments.

“Edge computing is poised to redefine how businesses leverage real-time data, and its future hinges on tailored, industry-specific solutions that address unique operational demands. We’re seeing service providers double down on investments—building out low-latency networks, enhancing AI-driven edge analytics, and forging partnerships to deliver scalable, secure infrastructure. These efforts are critical to realizing the full potential of edge computing, enabling everything from smarter manufacturing floors to responsive healthcare systems, and ultimately driving a new wave of innovation across verticals.”

— DAVE MCCARTHY, RESEARCH VICE PRESIDENT CLOUD AND EDGE SERVICES AT IDC

IDC WORLDWIDE EDGE COMPUTING SPENDING GUIDE

Primary Challenges in Edge AI Deployment

Overcoming Barriers to Edge AI Adoption

While the benefits of edge AI are compelling, enterprises face significant challenges when implementing these solutions at scale. Understanding these challenges is critical for developing effective deployment strategies:

1Security Vulnerabilities

Edge deployments create expanded attack surfaces that introduce new security challenges:

- Physical Access Threats: Edge devices often operate in physically accessible locations, creating risks of tampering or device compromise

- Device Authentication: Managing secure identity for thousands of distributed devices

- Data Protection: Ensuring sensitive data remains secure when processed outside secure data centers

- Threat Detection: Identifying and responding to security incidents across a distributed environment

Organizations report that securing edge devices is among their top three security concerns, and security incidents involving edge computing assets are on the rise. According to a recent survey of CIOs on edge AI, security risks and data protection concerns were the leading challenges in edge AI deployments.

3Inconsistent Infrastructure

Edge environments lack the standardization of cloud data centers:

- Network Variability: Dealing with inconsistent bandwidth, latency, and reliability

- Power Constraints: Adapting to limited or unreliable power in remote locations

- Environmental Factors: Accommodating temperature, humidity, and physical space limitations

- Local Computing Resources: Working with fixed, often limited computational capabilities

Infrastructure inconsistency is a major barrier to edge AI deployments, with network reliability being one of the top challenges.

2Operational Complexity

Managing edge computing at enterprise scale introduces substantial operational challenges:

- Device Heterogeneity: Supporting diverse hardware platforms with varying capabilities

- Deployment Logistics: Coordinating installation and configuration across geographically dispersed locations

- Software Updates: Managing OS and application updates across thousands of devices

- Monitoring at Scale: Maintaining visibility into the health and performance of distributed systems

Organizations managing more than 500 edge devices report spending twice the time on operational management than anticipated in initial planning.1

4Data Management Challenges

Edge AI creates unique data management requirements:

- Data Synchronization: Keeping edge and cloud environments in sync

- Data Governance: Maintaining control over data across distributed locations

- Storage Limitations: Working within the constraints of local storage capacity

- Data Quality: Ensuring consistent data quality across diverse collection points

Organizations implementing edge AI spend significantly more project time than anticipated addressing data management challenges.

5Skills Shortage

The intersection of edge computing and AI creates demand for specialized expertise:

- Edge Infrastructure Skills: Finding personnel with experience in distributed system architecture

- Edge AI Development: Sourcing talent capable of optimizing AI models for edge constraints

- Cross-Domain Knowledge: Requiring rare combinations of IT, OT, and data science expertise

Many organizations face significant challenges finding personnel with appropriate skills for edge AI implementation.

These challenges highlight why purpose-built edge computing platforms have become essential for enterprises seeking to deploy AI inference at scale. The right platform can address many of these challenges through standardized approaches to security, operations, and infrastructure management.

Building a Consistent Edge Platform

Overcoming Edge Challenges with Purpose-Built Solutions

To address the challenges outlined in the previous section, enterprises need an consistent edge computing platform that can provide standardized capabilities across diverse environments. Two key technologies have emerged as foundational elements of such platforms:

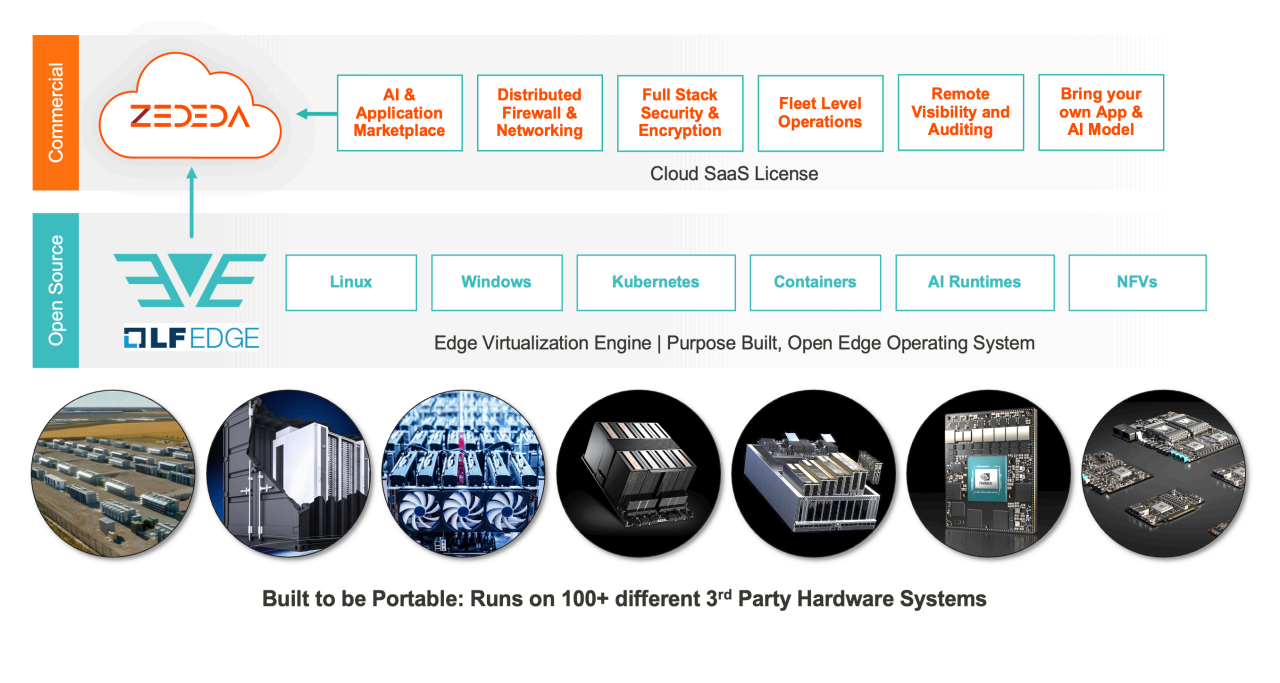

Edge Virtualization Engine (EVE)

The Linux Foundation Edge community project, EVE (Edge Virtualization Engine) is an open-source edge computing operating system designed specifically for distributed edge infrastructure:

- Hardware Abstraction: EVE provides a consistent interface across diverse hardware, from IoT gateways to edge servers

- Zero-Trust Security: Built with a security-first architecture that assumes hostile environments

- Lightweight Virtualization: Uses lightweight containers and VMs optimized for resource-constrained devices

- Autonomous Operation: Designed to function during network outages with local decision-making capability

- Orchestration API: Offers comprehensive remote management interface

“The intelligent edge requires solutions that can meet the real-time requirements of safety-critical environments. Our work with ZEDEDA combines Wind River’s expertise in mission-critical edge computing with ZEDEDA’s streamlined orchestration capabilities. This collaboration is particularly valuable for industries deploying AI in environments where reliability and security are non-negotiable.”

– Avijit Sinha, Sr. Vice President, Strategy and Global Business Development at Wind River

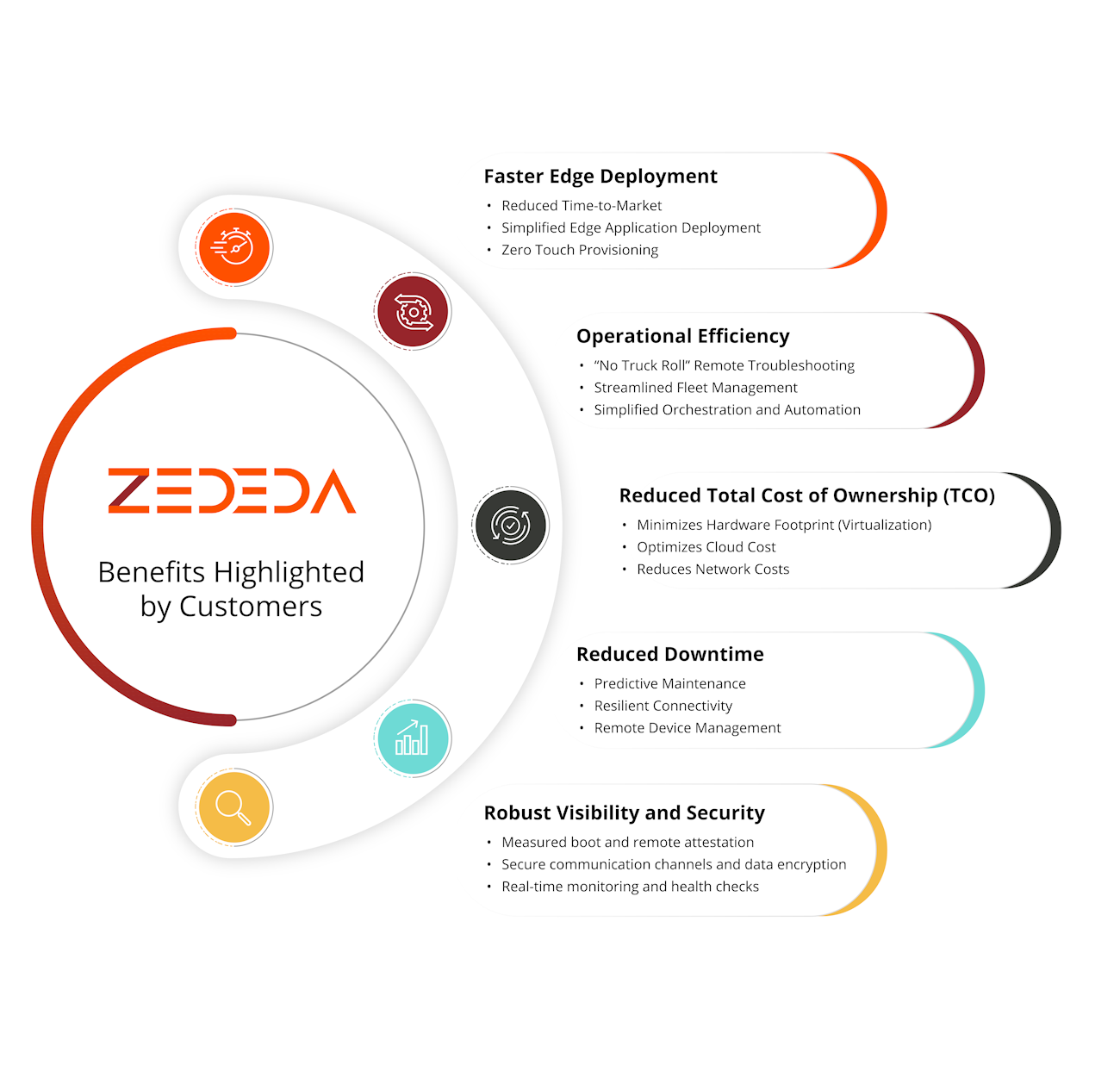

ZEDEDA Edge Computing Platform

Building on the EVE foundation, ZEDEDA has developed an enterprise-grade edge computing platform specifically designed for deploying and managing AI workloads at the edge:

- Zero Touch Provisioning: Enables rapid deployment without on-site technical personnel

- Application Orchestration: Simplifies management of AI applications across distributed locations • Hardware-Agnostic: Supports diverse compute platforms from major OEMs

- Secure Supply Chain: Provides verified measured boot and trusted execution environment

- AI-Optimized: Consistent platform capabilities including role-based access control, security, infrastructure orchestration, application update, rollback and more for AI model deployment and management

The Value of a Consistent Edge Platform

Leveraging a purpose-built edge computing platform like ZEDEDA delivers significant advantages for AI inference deployment:

- Unified Operations: A single management interface for all edge deployments, regardless of location, hardware, or scale

- Risk Reduction: Standardized zero trust security and compliance controls across the entire edge estate

- Deployment Acceleration: Reduced time-to-value through streamlined automated provisioning and management

- Cost Efficiency: Lower operational overhead through reduced staffing, automation, and simplified management

- Future-Proofing: Ability to adapt to evolving hardware and software without architectural changes

“Leveraging ZEDEDA’s edge security and virtualization platform, we aim to simplify and accelerate the deployment, management and orchestration of intelligent applications closer to data sources.. This collaboration represents a significant step toward the widespread adoption of enterprise edge computing.”

– Zach Shelby, CEO and Co-Founder, Edge Impulse

Key Takeaways and Additional Resources

Summary: The Business Imperative of Edge AI

As we’ve explored throughout this guide, edge computing for AI inference represents a transformative opportunity for enterprise business leaders to monetize proprietary data and create sustainable competitive advantage. The key takeaways include:

- Edge AI is Inevitable: The shift of AI workloads to the edge is not merely a technology trend but a business imperative driven by latency, bandwidth, privacy, and cost considerations.

- Purpose-Built Platforms are Essential: The unique requirements of edge computing demand specialized platforms different from those offered by traditional cloud providers.

- The Value Proposition is Clear: Organizations implementing edge AI are realizing substantial ROI through operational cost reduction, new revenue generation, and competitive differentiation.

- Implementation Challenges are Surmountable: With the right platform and strategic approach, enterprises can successfully navigate the complexities of edge AI deployment.

- The Time to Act is Now: With Gartner predicting 60% edge computing adoption of composite AI by 2029, organizations that delay risk falling behind competitors who are already building these capabilities.

Additional Resources

For technology professionals tasked with building edge computing architectures and preparing business cases, the following resources provide valuable additional information:

Technical Resources

- Linux Foundation Edge Documentation – Comprehensive technical information on the EVE platform

- ZEDEDA Developer Help Center – Technical guidance for implementing edge computing with ZEDEDA

- ZEDEDA Edge Academy – Featured ZEDEDA Training Course Catalog

- ZEDEDA Web Site – AI at the Edge Use Cases

- NVIDIA Jetson AI Platform – Hardware and software resources for edge AI deployments

Business & Strategy Resources

- Gartner: “Market Guide for Edge Computing” – Analysis of edge computing market trends

- ServiceNow and ZEDEDA Partner to Bring the Power of ServiceNow to the Edge

- A Buyers Guide to Edge Computing Platforms

- IDC Worldwide Edge Computing Spending Guide Media Release

- The ROI of AI Investments in Manufacturing, Data Prophet, May 2024

- 2025 ZEDEDA Edge AI Survey of 301 US-based CIOs

- How AI is Fueling Efficiency: Lessons from Shell’s Gas Industry Transformation, Medium October 2024

ZEDEDA makes Edge AI computing effortless, open and intrinsically secure — extending the cloud experience to the edge. ZEDEDA reduces the cost of managing and orchestrating distributed edge infrastructure and applications while increasing visibility, security and control. ZEDEDA delivers instant time to value, has tens of thousands of nodes under management and is backed by world-class investors with teams in the United States, Germany and India. For more information, visit www.ZEDEDA.com